OpenAI touts a new flavour of GPT-3 that can automatically create made-up images to go along with any text description

Human art will still, erm, probably prevail

OpenAI released a sneak peek of its latest GPT-3-based neural network: a 12-billion-parameter model, stylized as DALL·E, that is capable of automatically generating hundreds of fake images when it is given a text caption.

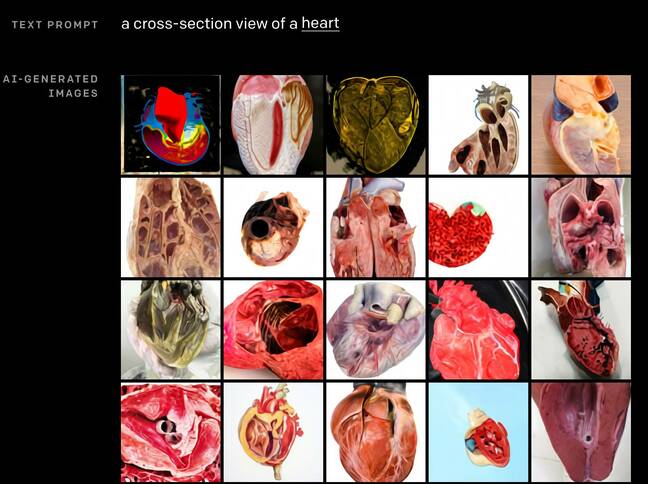

That might not sound all that interesting at first, but you have to see DALL·E in action to really appreciate it. It can create realistic images of animals, objects, or scenes. We played around with the system and here's what it spits out when it receives the prompt "a cross-section view of the heart."

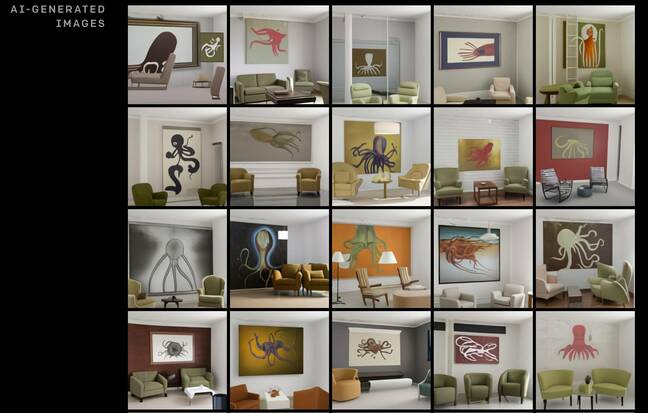

For a more complex example, here's "a living room with two olive armchairs and a painting of a squid. The painting is mounted above a coffee table." Not bad, eh?

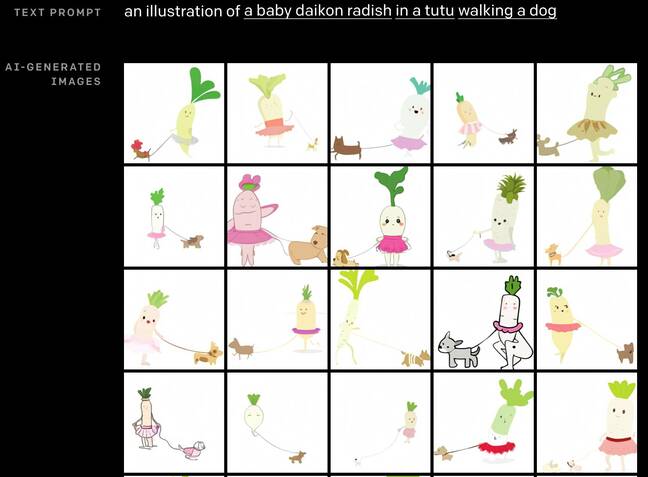

It can also come up with drawings for random, nonsensical concepts and dream up objects that probably don't exist, like the "illustration for a baby daikon radish in a tutu walking a dog."

There are all sorts of weird combinations you can play with in the examples on OpenAI's blog, where clicking through the drop-down menus lets you swap individual words in the text prompt. DALL·E was built from the massive language model GPT-3 and parses text. Instead of generating words and sentences, however, it spits out pixels and images.

It was trained with a dataset likely containing hundreds of millions of images scraped from the internet, along with their corresponding captions. The research lab is staying quiet about most of the technical details behind DALL·E for now, and said it plans to reveal more in an upcoming academic paper.

Although it's currently more of a curiosity than a useful tool, some believe it has the potential to disrupt creative industries. If a tool like DALL·E were commercially available, what would the future look like for designers, illustrators, artists, and photographers when a machine can do the same job faster?

"We recognize that work involving generative models has the potential for significant, broad societal impacts," OpenAI said. "In the future, we plan to analyze how models like DALL·E relate to societal issues like economic impact on certain work processes and professions, the potential for bias in the model outputs, and the longer term ethical challenges implied by this technology."

No, DALL·E does not spell the death of human art forever

Luba Elliott, a curator and researcher at Creative AI, a lab focused on the intersection of AI and creativity, told The Register that while "DALL·E could certainly have its uses as a commercial product," there would always be room for human-made art.

"Provided it can generate highly realistic images across the board, it could compete with Shutterstock or Getty Images for some use cases, depending of course on the cost per image and ease of generation. These use cases could include images for articles and blog posts, where the focus is on the writing and the image is there as a content filler.

"In terms of photographers and artists, it depends on what type of work they do. Illustrators and stock photographers may well lose some work to such tools, but we are still far off from replacing fine art photographers and artists with a distinct style and creative vision. This is because, at this stage, machines struggle to both come up with and execute truly novel ideas, frequently their output is heavily based on training data from the past and becomes interesting when shaped and given meaning to by a human artist."

Shutterstock and Getty Images did not respond to our questions.

Sofia Crespo and Feileacan McCormick, digital artists who work at a studio called Entangled Others, agreed. They reckoned that companies selling stock images would probably be hit harder than photographers and illustrators themselves, but thought that DALL·E isn't yet good enough to replace real images. Its creations are simplistic and the quality fluctuates based on the wording of its text prompt.

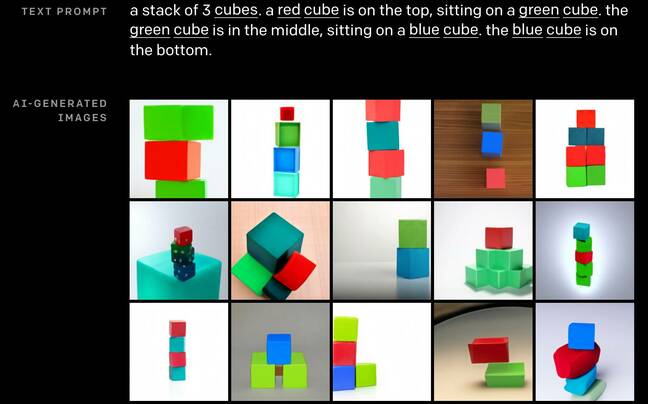

If the text input describes too many objects or is particularly wordy it can throw the machine off, making it generate incorrect images. "As more objects are introduced, DALL·E is prone to confusing the associations between the objects and their colors, and the success rate decreases sharply. We also note that DALL·E is brittle with respect to rephrasing of the caption in these scenarios: alternative, semantically equivalent captions often yield no correct interpretations," the researchers explained.

Here's an example that explicitly asks for an image that contains three cubes: a red one on top, a green one in the middle, and a blue one at the bottom. The model struggles to understand and comes up with multiple incorrect interpretations – its images contain the wrong number of cubes, and they're often stacked in the wrong order.

Like its predecessor GPT-3, DALL·E is flashy at first but not all that intelligent. The examples demonstrated in the blog post only show the top 32 images out of the 512 generated – that means the other 94 per cent or so are hidden from view. It's likely that if all the examples were shown, the image quality would progressively degrade.

Kyle McDonald, another artist working with code, believes that tools like DALL·E are probably "at least three to five years away from generating the kind of high-resolution imagery that is needed for general-purpose stock photography. Only a few kinds of specific images like faces and landscapes are covered right now," he said.

The ranking system might not be all that bad if DALL·E was capable of creating more high-quality images, Tom White, an artist and lecturer at the Victoria University of Wellington's School of Design in New Zealand, told us. "Automating this ranking of the outputs is pretty huge and makes this system much more practical than it otherwise would be."

It gives the user the ability to automatically filter the good from the bad without having to manually sort through all of the machine's creations.
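To make the arithmetic concrete, here is a minimal Python sketch of that kind of filtering: generate 512 candidates, score each one, and keep the best 32. The integer "images", the random scores, and the `top_k` helper are hypothetical stand-ins for illustration only; OpenAI has not described its ranking code here, and this is not it.

```python
import random


def top_k(candidates, score, k):
    """Return the k highest-scoring candidates, best first."""
    return sorted(candidates, key=score, reverse=True)[:k]


# Hypothetical stand-ins: 512 "images" identified by integers,
# each given a made-up quality score between 0 and 1.
rng = random.Random(0)
scores = {image_id: rng.random() for image_id in range(512)}

# Keep only the 32 best-scoring candidates, as in the blog's examples.
shown = top_k(list(scores), scores.get, 32)

# Share of generated images that never reach the reader.
hidden_share = 1 - len(shown) / len(scores)
print(f"shown: {len(shown)}, hidden: {hidden_share:.2%}")
```

With 32 of 512 kept, the hidden share works out to 93.75 per cent, which is the "94 per cent or so" mentioned above.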

Copyright issues and bias

If something like DALL·E were to become a commercial tool, there would be additional troubles on top of dwindling job opportunities for photographers, cartoonists, and the like.

Massive generative models are prone to memorising their training data. The larger the neural network, the more data is needed to train it, and the more it memorises. A group of researchers led by the University of California, Berkeley discovered that when using GPT-2, a smaller version of GPT-3 with fewer parameters than DALL·E, they were able to retrieve things like speeches, news headlines, hundreds of digits of pi, verses from the Bible and Quran, and even lines of code just by feeding the model with sentences lifted from the internet. The model is good at recalling information – given a prompt, it'll fill in the blanks with what it has seen before.

Since DALL·E is of the same ilk, the images it generates are also a mish-mash of what it has seen off the internet. Occasionally, it will probably create something that looks suspiciously similar to an existing drawing or photograph captured by a real artist. "The primary ethical issue with DALL·E is copyright laundering," Alex Champandard, co-founder of creative·ai, explained to El Reg. "It's trained on a large dataset scraped from the internet with no attribution. GPT language models were shown to reproduce their training content verbatim, so the legal situation here, for example of fair use, is unclear until it's tested in court."

Another glaring issue, one that affects all AI models and that DALL·E won't escape, is bias. What if someone uses it to come up with images that are offensive, racist, or obscene? What if these types of images are generated mistakenly? "As long as there are people in the loop to filter those out, it's OK, but that's going to be a problem if DALL·E becomes a standalone automated tool," Champandard said.

The concerns remain speculative for now unless developers create copycat versions that are highly effective and widely available. But it's not too crazy to believe something like DALL·E will eventually be commercialised. Microsoft has the exclusive rights to license OpenAI's GPT-3 technology, after all. It's possible Redmond may use the model as a tool to upgrade clip art and snazz up people's Word documents or PowerPoint presentations.

Microsoft and OpenAI declined to comment. ®