
Intel Labs unleashes its boffins with tales of quantum computing, secure databases and the end of debugging

Machine programming and other future technology touted at Chipzilla shindig

Intel on Thursday trotted out its research scientists to talk up technology initiatives that aim to make computation faster and developers less of a liability.

In a webcast presentation of Intel Labs Day, an event typically held at a Chipzilla facility in Silicon Valley, Rich Uhlig, senior fellow, VP and director of Intel Labs, presided over streaming video interviews with various Intel technical experts on integrated photonics, neuromorphic, quantum, and confidential computing, and machine programming.

Most of these presentations focused on silicon-oriented projects, such as Intel's work on chips that communicate using light rather than electricity, chips that mimic the architecture of the human brain, and chips that exploit quantum mechanics.

A series of confidential computing discussions also focused on trusted federated learning – training machine learning models on distributed data sets without exposing the data – and fully homomorphic encryption, which promises the ability to analyze ciphertext – encrypted data – without decrypting it.
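To make "analyze ciphertext without decrypting it" concrete, here is a toy sketch using the Paillier cryptosystem. Note the swap: Paillier is only partially homomorphic (it supports adding encrypted values, not arbitrary computation), so it stands in for, rather than exemplifies, the fully homomorphic schemes Intel is researching. The primes are deliberately tiny and insecure, and the function names (`keygen`, `encrypt`, `add_encrypted`, `scale_encrypted`, `decrypt`) are hypothetical, chosen for illustration.

```python
import math
import secrets


def keygen(p: int, q: int):
    """Toy Paillier key generation from two distinct primes. Far too small to be secure."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    # With the common generator choice g = n + 1, the decryption constant mu
    # reduces to the inverse of lam modulo n.
    mu = pow(lam, -1, n)
    return n, (lam, mu)


def encrypt(n: int, m: int) -> int:
    """Encrypt an integer 0 <= m < n; a fresh random r makes each ciphertext different."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2


def decrypt(n: int, priv, c: int) -> int:
    """Recover the plaintext using the private values (lam, mu)."""
    lam, mu = priv
    u = pow(c, lam, n * n)
    return ((u - 1) // n) * mu % n


def add_encrypted(n: int, c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the hidden plaintexts; no private key is needed."""
    return (c1 * c2) % (n * n)


def scale_encrypted(n: int, c: int, k: int) -> int:
    """Raising a ciphertext to the power k multiplies the hidden plaintext by k."""
    return pow(c, k, n * n)


n, priv = keygen(1789, 1861)
total = add_encrypted(n, encrypt(n, 20), encrypt(n, 22))
print(decrypt(n, priv, total))  # 42
```

Whoever holds only the public value `n` can compute the encrypted total; only the holder of `priv` learns that it is 42. Fully homomorphic schemes support both addition and multiplication on ciphertexts, enough to evaluate arbitrary computations, at the cost of the far larger ciphertexts Martin describes below.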

This would be immediately appealing to data-crunching organizations everywhere, at least those genuinely interested in security and privacy, if computer scientists could devise a way to make it affordable and speedy enough.

"In traditional encryption mechanisms to transfer and store data, the overhead is relatively negligible," explained Jason Martin, a principal engineer in Intel's Security Solutions Lab and manager of the secure intelligence team at Intel Labs. "But with fully homomorphic encryption, the size of homomorphic ciphertext is significantly larger than plain data."

How much larger? 1,000x to 10,000x larger in some cases, Martin said, and that has implications for the amount of computing power required to use homomorphic encryption.

"This data explosion then leads to a compute explosion," said Martin. "As the ciphertext expands, it requires significantly more processing. This processing overhead increases, not only from the size of the data, but also from the complexity of those computations."

Martin said this computational overhead is why homomorphic encryption is not widely used. Intel, he said, is working on new hardware and software approaches, as well as on building broader ecosystem support and standards. It may take a while.
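Ciphertext expansion shows up even in simple, partially homomorphic schemes. As an illustration (these are not Martin's figures, and Paillier is not one of the lattice-based schemes behind fully homomorphic encryption), a Paillier ciphertext is an integer modulo n^2, so it occupies roughly twice the bit length of the key modulus n, however small the encrypted value is. The helper name below is hypothetical.

```python
def paillier_expansion(modulus_bits: int, value_bits: int) -> float:
    """Approximate ciphertext-to-plaintext size ratio when each value is encrypted separately.

    A Paillier ciphertext is an integer modulo n**2, so it needs about
    2 * modulus_bits bits regardless of how few bits the plaintext value has.
    """
    ciphertext_bits = 2 * modulus_bits
    return ciphertext_bits / value_bits


# A 2048-bit modulus is a common Paillier key size.
for value_bits in (64, 32, 8):
    print(f"{value_bits}-bit values: {paillier_expansion(2048, value_bits):.0f}x larger")
# 64-bit values: 64x larger
# 32-bit values: 128x larger
# 8-bit values: 512x larger
```

The overhead is paid on storage and transfer, and again on every arithmetic operation, since each one works on the enlarged numbers.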

In the meantime, organizations can pursue federated learning using trusted execution environments like Intel's Software Guard Extensions, or SGX, provided they're comfortable with the constant security patches.
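Federated learning's core loop is simple to sketch. The example below is a minimal, hypothetical illustration of federated averaging, a widely used aggregation scheme assumed here rather than taken from Intel's presentation: each client fits a one-parameter linear model y = w * x on data that never leaves it, and the server only ever sees and averages the resulting weights. It runs as ordinary Python with no SGX enclave; in the setup described above, a trusted execution environment would be used to protect the training code and model while they run.

```python
def local_update(w: float, data, lr: float = 0.01, steps: int = 5) -> float:
    """Run a few gradient-descent steps on one client's private (x, y) pairs."""
    for _ in range(steps):
        # Gradient of mean squared error for the model y = w * x.
        grad = 2 * sum((w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_average(clients, rounds: int = 20) -> float:
    """Server loop: broadcast w, collect locally trained weights, average them."""
    w = 0.0
    total = sum(len(data) for data in clients)
    for _ in range(rounds):
        local_weights = [local_update(w, data) for data in clients]
        # Weight each client's contribution by how many examples it holds.
        w = sum(lw * len(data) for lw, data in zip(local_weights, clients)) / total
    return w


# Two hypothetical clients whose private data both follow y = 3x.
clients = [
    [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)],
    [(4.0, 12.0), (5.0, 15.0)],
]
print(round(federated_average(clients), 3))  # 3.0
```

Only the single number `w` crosses the network in each round; the raw (x, y) pairs stay with their owners.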

Mankind versus the machine

Intel's machine programming initiative, based on a 2018 research paper, "The Three Pillars of Machine Programming," stands out among the other projects. Rather than trying to push machines to do more, it's focused on making people do less.

"If we're successful with machine programming, the entire space of debugging will no longer exist," explained Justin Gottschlich, principal scientist and the director and founder of machine programming research at Intel Labs.

Gottschlich goes on to describe Intel's "blue sky" vision for the project as one in which few people actually do what we now think of as programming. In most cases, he suggests, people will just describe an idea to a machine, engage in a bit of back-and-forth to fill in the blanks, and then wait for this AI-driven system to generate the requested application.

"The core principle of machine programming is that once a human expresses his or her intention to the machine, the machine then automatically handles the creation of all the software that's required to fulfill that intent," said Gottschlich.

Machine programming is a part of the ongoing effort to develop low-code or no-code tools, generally through some form of automation. There are a variety of reasons for doing so, mainly having to do with supply and demand.

Companies like to talk about democratizing coding, as if they should be applauded for civic altruism. They tend to be less vocal about the fact that more coders means more competition for programming jobs, which lets employers pay lower salaries.

Gottschlich's spin on the supply problem is that industry trends have made the talent shortage worse.

"There are two emerging trends we observed that work against each other," he explained. "First, compute resources are becoming more and more heterogeneous as we specialize for certain types of workloads. And that requires expert programmers, what you might call ninja programmers, [who] deeply understand the hardware and how to get the most out of it."

"But at the same time, software developers increasingly favor languages that provide higher levels of abstraction, to support higher productivity and that in turn makes it hard to obtain the performance that the hardware is inherently capable of," he added. "And this gap is widening."

In other words, people's passion for Python is why tech companies can't find enough C++ experts who know about GPUs, FPGAs, and other technical specialties.

Gottschlich pointed out that only 1 per cent of the world's 7.8bn people can code. "Our research in machine programming is looking to change that 1 per cent to 100 per cent," he said.

But the kind of programming he expects people will do is not what they currently do.

Imagine a painter having to first create the paintbrush, the canvas, the easel, the frame, and the paint itself before creating any actual work of art, Gottschlich suggested. "How many painters, realistically, do we think can do all of that?" he asked.

"I would argue that that number is somewhere between very few and zero. But this analogy is similar to what's required for how a lot of software is developed today."

At the same time, people are the problem. They make mistakes and that has a productivity cost. Gottschlich cited a 2013 Cambridge University study that found US programmers on average spent half their time debugging.

"Debugging is slowing our programmers down," Gottschlich said. "There's a loss of productivity. And debugging by nature means that the quality of the software isn't acceptable, otherwise we wouldn't be debugging. So in some sense this is exactly what we want to fix."

Swat those bugs

Debugging is almost always the result of miscommunication between the programmer and the machine, said Gottschlich. Machine programming, which is focused on understanding that intention, aims to correct this. "If we assume for a moment then that human intention can be perfectly captured by the machine, in theory, debugging essentially vanishes. Poof. It's gone."

In its place, we get greater productivity and higher quality software. Gottschlich said Intel has developed a dozen machine programming systems and went on to describe three of them.

The first, he said, can automatically detect performance bugs. It writes automated tests on its own and can adapt those tests to different hardware architectures. The system, called AutoPerf, is described in a paper presented at NeurIPS, "A Zero-Positive Learning Approach for Diagnosing Software Performance Regressions."
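The "zero-positive" idea in that title is that the detector is trained only on examples of normal behaviour, with no labelled regressions at all, and flags anything that strays from what it learned. As best we understand the paper, AutoPerf does this with autoencoders over hardware performance-counter profiles; the sketch below swaps in a much simpler per-counter z-score test to show the principle. Counter names, values and the threshold are invented for illustration.

```python
import statistics


def fit_baseline(normal_runs):
    """Learn the mean and standard deviation of each counter from non-regressed runs only."""
    baseline = {}
    for counter in normal_runs[0]:
        values = [run[counter] for run in normal_runs]
        baseline[counter] = (statistics.mean(values), statistics.stdev(values))
    return baseline


def looks_like_regression(baseline, run, threshold: float = 4.0) -> bool:
    """Flag a run if any counter sits more than `threshold` standard deviations from normal."""
    for counter, (mean, std) in baseline.items():
        z = abs(run[counter] - mean) / max(std, 1e-9)  # guard against zero spread
        if z > threshold:
            return True
    return False


# Hypothetical counter readings from runs known to be healthy.
normal_runs = [
    {"cache_misses": 1000, "branch_misses": 200},
    {"cache_misses": 1020, "branch_misses": 195},
    {"cache_misses": 990, "branch_misses": 205},
    {"cache_misses": 1010, "branch_misses": 198},
]
baseline = fit_baseline(normal_runs)
print(looks_like_regression(baseline, {"cache_misses": 1008, "branch_misses": 201}))  # False
print(looks_like_regression(baseline, {"cache_misses": 3000, "branch_misses": 200}))  # True
```

No example of a slow run was ever shown to the detector; the tripled cache-miss count is caught purely because it doesn't look like anything in the healthy baseline.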

The second is called Machine Inferred Code Similarity, or MISIM. It tries to identify when pieces of code are performing the same function even if they do so in different ways.
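What "the same function in different ways" means is easy to show, even if detecting it automatically is the hard part. MISIM learns a similarity score from the code's structure without running it; the sketch below swaps in plain differential testing (run both candidates on the same inputs and compare results), which can show that two functions disagree but can only suggest, never prove, that they agree. All function names are hypothetical.

```python
def sum_of_squares_loop(n: int) -> int:
    """1^2 + 2^2 + ... + n^2, computed with an explicit loop."""
    total = 0
    for i in range(1, n + 1):
        total += i * i
    return total


def sum_of_squares_formula(n: int) -> int:
    """The same quantity via the closed form n(n + 1)(2n + 1) / 6."""
    return n * (n + 1) * (2 * n + 1) // 6


def sum_of_cubes(n: int) -> int:
    """A structurally similar function that computes something different."""
    return sum(i ** 3 for i in range(1, n + 1))


def behave_alike(f, g, inputs) -> bool:
    """True if f and g return equal results on every sampled input."""
    return all(f(x) == g(x) for x in inputs)


inputs = range(0, 100)
print(behave_alike(sum_of_squares_loop, sum_of_squares_formula, inputs))  # True
print(behave_alike(sum_of_squares_loop, sum_of_cubes, inputs))  # False
```

The loop and the formula share almost no syntax yet compute the same thing, while the loop and `sum_of_cubes` look alike but differ from n = 2 onward. That gap between surface form and behaviour is what a code-similarity system has to see through.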

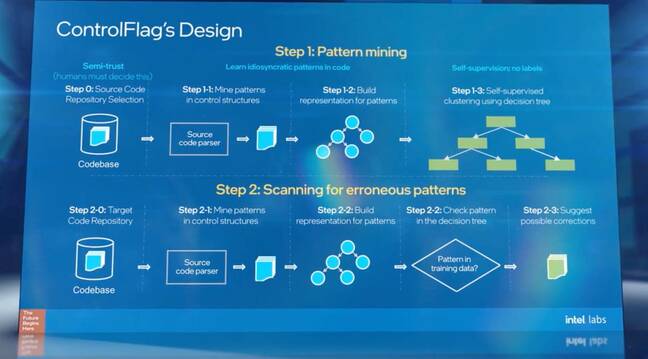

The third is another bug finding system called ControlFlag, which will be presented in a paper at NeurIPS 2020 next week. It can find logical bugs and inefficiencies not flagged by rules-based compilers – it relies on pattern-based anomaly detection that learns on its own.

For example, it was set loose on the source code for cURL, a popular data transfer library, and it found a previously unnoticed comparison where an integer was used in place of a boolean (true/false) value.
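The underlying idea, learning what "normal" code looks like from unlabelled examples and flagging rare deviations, can be caricatured in a few lines. This is not ControlFlag's algorithm: the sketch below simply abstracts each if-condition into a pattern (identifiers become VAR, numbers become NUM), counts patterns across a small invented corpus, and flags any condition whose pattern appeared fewer than `min_count` times. The corpus, the threshold and the `s->flag` conditions are all hypothetical, loosely modelled on the integer-versus-boolean comparison described above.

```python
import re
from collections import Counter

CONSTANTS = {"TRUE", "FALSE", "true", "false", "NULL"}
# Identifiers, numbers, '->', two-character comparisons, then any other single character.
TOKEN = re.compile(r"[A-Za-z_]\w*|\d+|->|[<>=!]=|[<>]|\S")


def abstract(condition: str) -> str:
    """Replace identifiers and numbers with placeholders, keeping operators and constants."""
    out = []
    for tok in TOKEN.findall(condition):
        if tok in CONSTANTS:
            out.append(tok)
        elif tok[0].isalpha() or tok[0] == "_":
            out.append("VAR")
        elif tok.isdigit():
            out.append("NUM")
        else:
            out.append(tok)
    return " ".join(out)


def learn_patterns(corpus) -> Counter:
    """Count abstracted patterns; no human labels are involved."""
    return Counter(abstract(c) for c in corpus)


def is_anomalous(patterns: Counter, condition: str, min_count: int = 2) -> bool:
    """Flag conditions whose pattern is rare (or unseen) in the learned corpus."""
    return patterns[abstract(condition)] < min_count


# A tiny invented corpus of if-conditions standing in for "code out in the world".
CORPUS = [
    "count > 0", "n > 0", "len >= max_len", "len >= limit",
    "ptr != NULL", "node != NULL", "conn->ready == TRUE", "req->done == TRUE",
    "conn->closed == FALSE", "req->failed == FALSE",
]
patterns = learn_patterns(CORPUS)
print(is_anomalous(patterns, "s->flag == TRUE"))  # False
print(is_anomalous(patterns, "s->flag > TRUE"))  # True
```

Ordering comparisons against a boolean constant never appear in the corpus, so `s->flag > TRUE` stands out even though it compiles without complaint.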

ControlFlag learns about code without human-generated labels. "Instead, what we do is we send the system out into the world to learn about code," said Gottschlich.

"When it comes back, it has learned a number of amazing things. We then point it at a code repository, even code that is production quality and has been around for decades. And what we found is it tends to find these highly complex nuanced bugs, some of which have been overlooked by developers for over a decade."

Asked by Gottschlich how long it might be before this "blue sky vision" of automated programming is realized, MIT professor Armando Solar-Lezama, a co-author with Gottschlich on the 2018 "The Three Pillars of Machine Programming" paper, expressed doubt that people will ever be cut out of the loop completely.

"I don't think there's going to be some kind of end point where we're going to be able to say, 'We are done, programming is now the thing that machines do, and we are going to spend our time playing music and fruit in the countryside,'" he said.

"...What I really see is this major shift in automation, and in the kind of expectations that people have about what it means to program, and the kind of things that you can delegate to a programming tool." ®