
End-to-end NVMe arrays poised to resurrect external storage

Who needs DAS with RDMA?

Analysis NVMe-over-Fabrics (NVMe-oF) arrays are performing as fast as servers fitted with the same storage media, such as Optane or Z-SSD drives. Because NVMe-oF uses RDMA (Remote Direct Memory Access), the network latency involved in accessing external storage arrays effectively goes away.

This can be seen with performance results in financial benchmarks, genomics processing, and AI/ML applications.

Finance benchmark

The finance industry has its own specialised set of benchmarks allowing objective comparisons to be made between IT systems used in specific kinds of financial industry applications, such as tick data analysis*, for which standard SPEC and TPC-type benchmarks are not best suited.

These benchmarks are devised and controlled by STAC, the Securities Technology Analysis Center, which has around 300 financial organisation members and some 55 vendor members.

STAC has developed a range of big-data, big-compute, and low-latency workloads focussed on its members’ business needs. Benchmarks can be published either publicly or in a STAC members-only vault.

Accessing the public ones requires STAC membership, but basic membership is free and only requires registration.

The STAC-M3 benchmark is described as an industry-standard benchmark suite for tick database stacks. These HW/SW stacks are used to analyse time-series data such as tick-by-tick* quote and trade histories. STAC-M3 assesses the ability of a solution stack such as columnar database software, servers, and storage, to perform a variety of I/O-intensive and compute-intensive operations on a large store of market data.

The specifications are completely agnostic to architecture, which means that STAC-M3 can be used to compare different products or versions at any layer of the stack, such as database software, processors, memory, hard disks, SSD, interconnects, and file systems.

There are several suites, including the baseline Antuco suite, which forces access to storage; the Kanaga scaling suite; and the Shasta suite, in which memory can be substituted for storage.

Antuco results are 17 mean response-time benchmarks, each of which may have multiple part values, and are published individually by vendor system submission. There is no single overall score for the 17 component tests and STAC does not publish an overall document with all 17 test scores for each vendor-submitted system.

STAC's summary Report Cards for the Antuco suite highlight 11 separate tests, mostly concerning response time. Some come with mean, maximum, minimum, median, and standard deviation numbers, as well as MB/sec read figures.
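For readers unfamiliar with how such summary figures are derived, here is a minimal Python sketch that computes the statistics listed above from a set of response times. The sample values are invented for illustration and are not STAC results; the `summarise` helper is hypothetical, and the use of the sample (rather than population) standard deviation is our assumption, not something STAC's documents confirm.

```python
import statistics

# Hypothetical response times in milliseconds for repeated runs of one
# operation. These are made-up values, NOT taken from any STAC report.
response_times_ms = [12.0, 15.0, 11.0, 14.0, 13.0]


def summarise(samples):
    """Return the kind of summary statistics a report card might list."""
    return {
        "mean": statistics.mean(samples),
        "median": statistics.median(samples),
        "min": min(samples),
        "max": max(samples),
        # Assumption: sample standard deviation (n - 1 denominator).
        "stdev": statistics.stdev(samples),
    }


summary = summarise(response_times_ms)
for name, value in summary.items():
    print(f"{name}: {value:.2f} ms")
```

Each of the 17 Antuco operations would get its own set of numbers like this, which is one reason the results do not collapse into a single score.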

All in all, looking up and assessing the STAC-M3 data is damned hard work.

Public STAC-M3 reports can be found here and you need to add M3 domain access to your membership to access them.

There are STAC Antuco results for servers using Axellio (members-only vault), E8 storage arrays (PDF) with Optane drives, IBM FlashSystem 900 (old now - April 2016), Samsung Z-SSDs, and Vexata arrays.

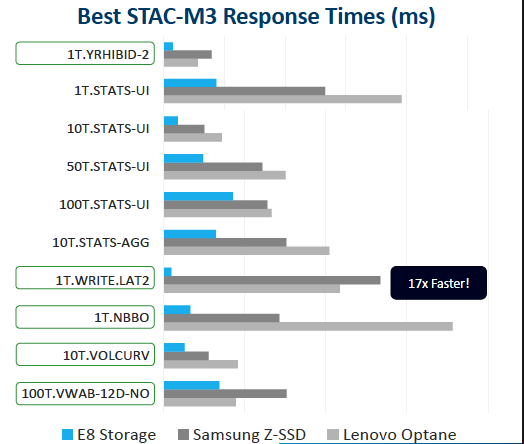

Here is a comparison between E8 external NVMe-oF storage and servers fitted with either Samsung Z-SSDs or Intel Optane (3D XPoint) drives:

E8 claims the performance of its NVMe-oF storage array, using Optane drives, is up to 17 times better than a Dell server system using Samsung Z-SSDs (STAC test KDB180418a, PDF).

It was also faster than a Lenovo server fitted with direct-access Optane drives (STAC test KDB171010, PDF).

To use an analogy, the trouble here is that STAC results will tell you how a racecar performs on the many individual curves of a track, with entry and exit speeds and time through each corner, but will not give you its single lap time for the whole course. They are highly specific to individual applications and their run types.

So E8 says its system has a faster response time in 10 of 17 STAC Antuco operations than Samsung Z-SSD and Lenovo Optane servers, with 17x the Samsung performance for the 1T.WRITE benchmark. Does that mean an E8 array will run your Oracle database application faster than a Lenovo Optane server? Not necessarily.

But these STAC results do say NVMe-oF arrays of Optane drives can perform better than – or as well as – servers with local Optane drives.

Genomics and AI/ML

A UK customer found E8’s storage, used with Spectrum Scale (GPFS), enabled it to accelerate its genomics processing 100X, from 10 hours per genome to 10 genomes per hour. The E8 array was used as a fast storage tier and scratch space.
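The 100X figure follows directly from the two throughput numbers quoted. A quick sanity check in Python, using only those two numbers (the variable names are ours):

```python
# Before: one genome took 10 hours, i.e. 0.1 genomes per hour.
before_genomes_per_hour = 1 / 10

# After: 10 genomes per hour, as quoted for the E8-backed setup.
after_genomes_per_hour = 10

# Speed-up is the ratio of the two throughputs.
speedup = after_genomes_per_hour / before_genomes_per_hour
print(f"Speed-up: {speedup:.0f}X")
```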

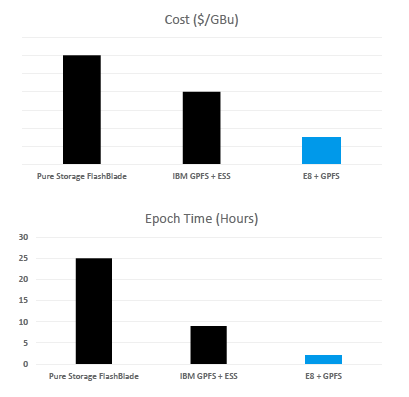

In AI/ML work, E8 says its arrays feed data to and from servers fitted with Nvidia GPUs and using Spectrum Scale 4X faster than IBM’s own ESS (Elastic Storage Server) does, and 10X faster than Pure Storage’s FlashBlade systems do.

E8 also claims its system is half the cost of the ESS system, and the chart above shows it costing a quarter or less of what FlashBlade does.

E8 has said AI and ML data sets are incompatible with deduplication and compression, which can ruin performance.

This advantage over Pure, measured with servers fitted with GPUs, suggests that, were E8 to run ResNet AI benchmarks, it could beat both Pure AIRI and NetApp A700 systems. The advantage should widen as the GPU count increases.

TL;DR:

If storage IO performance is the main criterion for choosing the storage element of a server or storage array, then NVMe-oF levels the playing field between direct-attached storage and shared external arrays. And where end-to-end NVMe trounces SAS and SATA SSD systems, end-to-end NVMe startups can compete on more equal ground than before with all-flash array incumbents.

When performance is king, and an NVMe-oF system can speed genomics processing 100X, shave seconds or even minutes off financial trading analysis runs, and accelerate real-time AI/ML applications, then NVMe-oF arrays and startups such as Apeiron, E8 and Excelero get a foot in the door and an invitation to sit at the bid table.

NVMe-oF array technology is becoming a must-have for every primary data storage array vendor.

What would be really, really, really fascinating would be to know the results of a bake-off between memory-caching Infinidat, which eschews all-flash systems, and an NVMe-oF array system. Can Moshe Yanai's arrays outrun NVMe-oF ones? We’ll surely see an answer to that question eventually. ®

*Gaining historical insight into data by viewing it in increments of time – or in "tick-by-tick format" (also known as tick data).