
Measure for measure: Why network surveys don't count what counts

Punters want reliable calls most

Interview If you can't measure something, does it actually exist?

That's a question raised by Dr Paul Carter, founder of the oldest mobile network performance operation, GWS. Carter's company has been doing labour-intensive drive-testing of the networks for over 20 years, which is not cheap, but it is thorough. The more rigorous, scientific approach means that call quality and dropped calls can actually be measured. But in recent years, a wave of "crowd-sourced" rivals has sprung up, attracted to the market because gathering data on Android phones is cheap.

Crowd-derived data can be fascinating – the ping rates and device performance figures Tutela has gleaned are genuinely useful. But the problem, Carter argues, is that the crowd-sourced outfits are not measuring what actually matters most to people.

"The most important network function people want in the UK – and we gave them a long list – is making voice calls. 69 per cent of people want that. Texting is important for 53 per cent of people, and the web third, with 43 per cent. Video streaming was cited as important by just 3 per cent of punters," Carter says.

"Clearly data services are not unimportant – but the differentiator on smartphones is to make voice calls. That's still fundamentally the most important functionality of the phone."

Something else that crowd-sourced testing does not measure is reliability.

"People want a reliable network more than they want a faster network," Carter adds. Combine these two areas where important stuff isn't being measured, Carter argues, and purely crowd-sourced analysis from operations like Tutela and OpenSignal falls short. Crowd-sourced data cannot test call reliability so it concentrates on measuring what it can: speed.

Rather than whingeing about the upstarts, the Shakespeare-loving founder (Carter is a Trustee of the Royal Shakespeare Company) went back to the drawing board. GWS's response is to fold more subjective consumer data – drawn from interviews with punters – into the final OneScore ranking.

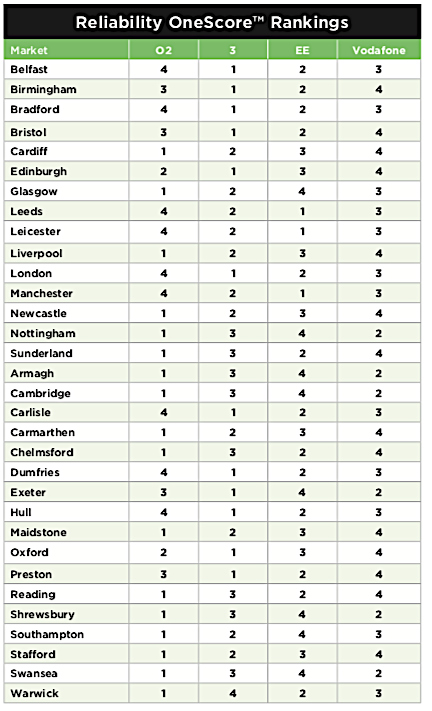

In the first survey GWS published using this ranking, there are some surprising results. EE routinely tops the crowd results because it has the fastest network – thanks to scale and spectrum – but out of 32 British towns and cities, GWS finds it's the most reliable in only three: Leeds, Leicester and Manchester. Hutchison's Three typically ties for last when it comes to speed (usually with O2) – because it held on to 3G HSPA+ for so long, and has the poorest spectrum allocation – but for reliability it comes top in 14 metro areas, including London and Birmingham. O2 also figures well, being the most reliable network in 17 cities including Liverpool and Glasgow.

How come it's so different to crowd-derived results?

"They're looking at throughput and missing the important stuff. They're not even measuring voice calls," Carter says. "Often they're simply doing a capacity test, using multiple threads, to see what the maximum throughput is at that location. In a way that's a non-typical use case. If you were skiing there that's not something you would actually do." If a network pushes newer devices at people then it will get better results, he points out, as older phones won't support newer technologies and frequency bands.

Many of the speed tests are pointless, he argues, once a network reaches a given level. He compares it to the UK's Universities and Colleges Admissions Service.

"If I give you a target of three Bs [at A level] to get in, and you get three As, you're still in – you're not in faster, or more 'in'. Our networks are a little bit like that," he says. "A Facebook or Instagram post could arguably go half a second faster but if you look at a stopwatch, it's hard to save a tenth of a second." Neither is much use if the network isn't reliable.

Carter says GWS isn't out to downplay the importance of data, but to champion the consumer's interest.

"If people could give the networks advice it would be to make them more reliable countrywide, blanket coverage: do everything everywhere; maybe go old school and improve voice with fewer blank spots. It's the same message, over and over again."

O2 also finds itself doing well in the subjective "very satisfied" rating from punters. London suffers worst for dropped calls.

The data was gathered using the Rohde and Schwarz Freerider app in late 2017 and early 2018, while the polling and focus groups covered almost 3,000 punters across the UK.

Tutela and OpenSignal are two recent rivals that rely primarily on crowd data. IHS Markit's RootMetrics started out using crowd data but has since shifted much more towards drive-testing. The consumers' association Which? attracted criticism for relying on crowd data for its "worse than Peru" report – this year we learned Britain is in worse nick than Armenia.

Carter points out that 81 per cent of punters report they're generally happy with their operator and the satisfaction rating is steadily improving – partly, he suggests, because VoWi-Fi capability is filling in the notspots.

"If Donald Trump had an 81 per cent approval rating, he'd be doing backflips." ®