The hold is filled with storage news! Grab a bucket and BAIL

Keelhauling through the week's movers and shakers

Behind every great enterprise and technology news website lies storage, humming away in the background, heeded by no one. But the industry never stands still, and every week El Reg is inundated with news, some significant, some less so. However, we're not solely a storage 'zine, and we need somewhere to stack the shorter bits that wouldn't necessarily make a standalone story but that we know you storageheads out there would appreciate. So pull up a pew, pour yourself a mug o' joe, and read on to find out about Bristol Uni's new supercomputer, Intel's SSD wins, Diablo Tech's Memory1 benchmarks, and much more.

Cohesity and Lenovo

Cohesity (secondary storage consolidation) has received global certification from Lenovo, which will deliver (resell, we think) Cohesity's software product in China. It's a good win for Cohesity.

Lenovo ITS director PengCheng Zhang said: "Cohesity's product is both a disruptive and innovative solution for Lenovo. We see a substantial opportunity to simplify data protection and NAS use cases."

Cohesity's head of corporate and business development, Vivek Agarwal, was pleased. "Working with Lenovo will allow us to continue our rapid expansion into different markets across the world, as well as at home in the United States."

Cohesity and HPE

HPE will resell Cohesity's software combined with its own enterprise-class servers and network switches, starting immediately, through the worldwide HPE Complete program. The deal follows a pilot program that began last December.

The system is a pre-configured, certified scale-out storage solution that spans both on-premises and public cloud infrastructure. It uses HPE ProLiant servers and networking to consolidate all secondary storage workflows, from data protection to test/dev and analytics, on Cohesity's platform.

This pre-validated system is being offered through the entire HPE channel and enterprise sales force.

DataCore

DataCore has run its sixth annual survey, looking at the impact of software-driven storage deployments within organisations across the globe. It probed for levels of spending on technologies including software-defined storage, flash technology, hyperconverged storage, private cloud storage and OpenStack.

Software-defined storage topped the 2017 spending charts, with 16 per cent of respondents reporting that it represented 11-25 per cent of their allocated budget, and 13 per cent saying it made up more than 25 per cent of their budget (the highest of any category).

Only 6 per cent of those surveyed said they were not considering a move to software-defined storage.

Unexpectedly, the findings showed that very little funding is being earmarked in 2017 for much-hyped technologies such as OpenStack storage, with 70 per cent of respondents marking it "not applicable".

The top business drivers for implementing software-defined storage were:

- To simplify management of different models of storage – 55 per cent

- To future-proof infrastructure – 53 per cent

- To avoid hardware lock-in from storage manufacturers – 52 per cent

- To extend the life of existing storage assets – 47 per cent

One of the questions was "What technology disappointments or false starts have you encountered in your storage infrastructure?" The top three answers were:

- Cloud storage failed to reduce costs – 31 per cent

- Managing object storage is difficult – 29 per cent

- Flash failed to accelerate applications – 16 per cent

Respondents named databases and enterprise applications (ERP, CRM, etc) as the two environments suffering the most severe performance problems where storage is suspected to be the root cause. DataCore reckons the need for faster databases and data analytics is driving new requirements for technologies that optimise performance and meet demand for real-time responses.

Diablo Technologies

Diablo Technologies has released a set of Memory1 flash DIMM benchmarks to show how these DIMMs make servers go faster; there's no need to wait for Optane XPoint, it says.

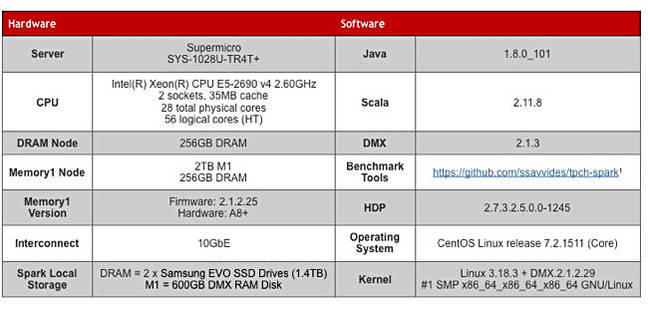

It used the TPC-H benchmark for Apache Spark SQL workloads. Benchmarks were run on the following configurations:

- 5-node DRAM cluster vs 5-node Memory1 cluster

- 7-node DRAM cluster vs 2-node Memory1 cluster

For Spark local storage, all DRAM nodes used SSDs and all Memory1 nodes used DMX RAM Disk.

[Image: Diablo Supermicro server details in Spark benchmark runs]

By increasing the cluster memory size with Memory1, the servers were able to improve processing times by as much as 289 per cent while lowering the overall total cost of ownership (TCO) by as much as 51 per cent.

Overall, Diablo tells us that using Memory1 lets each server achieve three times the performance at approximately half the overall cost.
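Taking Diablo's headline figures at face value, and assuming both "performance" and "cost" are measured per server against the DRAM baseline (our assumption, since Diablo doesn't spell out the exact metric), the implied gain in performance per unit of cost works out as:

$$
\frac{(\text{performance}/\text{cost})_{\text{Memory1}}}{(\text{performance}/\text{cost})_{\text{DRAM}}} \approx \frac{3}{0.5} = 6
$$

That is roughly six times the work per dollar, though this is our own arithmetic on vendor-supplied numbers rather than a figure Diablo quoted.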

Why open-source Apache Spark? It enables high-speed processing of large and complex datasets, and its Spark SQL module for structured data lets SQL queries be run against Spark data. Diablo says Spark's large in-memory requirement makes it an ideal application for Memory1.