
Memory1: All right, Sparky, here's the deal: We've sorted your DRAM runtimes

Inspur and Diablo smash those datasets

Analysis Chinese server vendor Inspur has cut Spark workload runtimes in half by bulking out DRAM with Diablo Technologies' Memory1 technology.

Inspur and Diablo say users can get more work done per server, and process larger datasets in less time, than with servers using DRAM alone.

Memory1 is a 128GB DDR4 module using NAND flash that fits into a DRAM socket, with a portion of the server's DRAM used as a read and write cache. Data is transferred between the cache and the Memory1 DIMM by Diablo firmware and software.

Memory1 is a way of using NAND as a DRAM extension in a server's DIMM space, so expanding the memory available to an application. Diablo says that a server limited to 512GB of DRAM could instead have up to 2TB of memory (DRAM + NAND) by using Memory1 DIMMs.
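The general idea of a small, fast DRAM tier acting as a read and write cache in front of a larger, slower NAND tier can be sketched in a few lines of Python. This is a toy model only, not Diablo's firmware or software: the `TieredStore` class, the least-recently-used eviction policy and the write-back behaviour are all assumptions made for illustration.

```python
from collections import OrderedDict


class TieredStore:
    """Toy model: a small fast tier (standing in for DRAM) that caches
    pages from a large slow tier (standing in for NAND).

    Hypothetical illustration only; the LRU policy and write-back
    behaviour are assumptions, not Diablo's design.
    """

    def __init__(self, cache_pages):
        self.cache_pages = cache_pages
        self.cache = OrderedDict()  # page -> (data, dirty), oldest first
        self.backing = {}           # page -> data in the slow tier
        self.hits = 0
        self.misses = 0
        self.writebacks = 0

    def _install(self, page, data, dirty):
        # Put the page in the fast tier and mark it most recently used.
        self.cache[page] = (data, dirty)
        self.cache.move_to_end(page)
        if len(self.cache) > self.cache_pages:
            # Evict the least recently used page; flush it if modified.
            old_page, (old_data, old_dirty) = self.cache.popitem(last=False)
            if old_dirty:
                self.backing[old_page] = old_data
                self.writebacks += 1

    def read(self, page):
        if page in self.cache:
            self.hits += 1
            data, dirty = self.cache[page]
            self._install(page, data, dirty)
            return data
        # Miss: fetch from the slow tier and cache it as a clean page.
        self.misses += 1
        data = self.backing.get(page)
        self._install(page, data, False)
        return data

    def write(self, page, data):
        if page in self.cache:
            self.hits += 1
        else:
            self.misses += 1
        # Writes land in the fast tier and reach the slow tier on eviction.
        self._install(page, data, True)
```

The point of the model is that an application whose working set fits in the fast tier rarely touches the slow one, while a larger working set still fits in the combined space rather than spilling to external drives.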

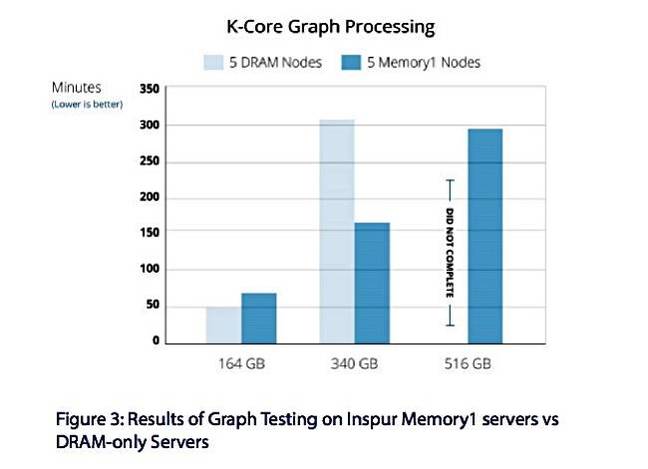

Inspur and Diablo ran a Spark (v1.5.2) benchmark using the memory-intensive k-core decomposition algorithm of Spark's GraphX analytics engine. The algorithm was run against three graph datasets of varying sizes:

- 164GB set of 100 million vertices with 10 billion edges

- 340GB set of 200 million vertices with 20 billion edges

- 516GB set of 300 million vertices with 30 billion edges
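For readers unfamiliar with k-core decomposition, here is a minimal single-machine sketch of the classic peeling approach: repeatedly remove the vertex with the lowest remaining degree and record the highest degree seen so far as its core number. It is not the distributed GraphX code used in the benchmark; the `core_numbers` function name and the edge-list input format are assumptions for illustration, and the simple minimum search makes it quadratic, so it only suits tiny graphs.

```python
from collections import defaultdict


def core_numbers(edges):
    """Return {vertex: core number} for an undirected graph.

    `edges` is an iterable of (u, v) pairs. Hypothetical helper for
    illustration; not the benchmark's GraphX implementation.
    """
    adj = defaultdict(set)
    for u, v in edges:
        if u != v:  # ignore self-loops
            adj[u].add(v)
            adj[v].add(u)

    degree = {v: len(nbrs) for v, nbrs in adj.items()}
    remaining = set(adj)
    core = {}
    k = 0
    while remaining:
        # Peel off the remaining vertex with the smallest current degree.
        v = min(remaining, key=degree.__getitem__)
        # Core numbers never decrease as peeling proceeds.
        k = max(k, degree[v])
        core[v] = k
        remaining.remove(v)
        # Removing v lowers the degree of its surviving neighbours.
        for w in adj[v]:
            if w in remaining:
                degree[w] -= 1
    return core
```

For a triangle with one extra vertex hanging off it, `core_numbers([(1, 2), (2, 3), (1, 3), (3, 4)])` gives core number 2 to the triangle's vertices and 1 to the pendant vertex. The per-vertex degree and adjacency state that has to be kept and updated is what makes the job memory-hungry at billions of edges.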

For this they used a cluster of five Inspur NF5180M4 servers fitted with:

- Two Xeon E5-2683 v3 14-core processors

- 256GB DDR4 DRAM

- 1TB NVMe SSD for storing graph data

- 2TB of Memory1 for certain test runs

Tests were run using DRAM only and then again with DRAM plus Memory1.

With this configuration Diablo says: "We can fit essentially all of the initial data and the working data in Memory1, while the DRAM servers will need to continually persist data to the NVMe drives."

Inspur/Diablo DRAM-only and DRAM+Memory1 Spark GraphX tests

The 164GB set test showed Memory1 adding to runtime (67 mins), with the DRAM-only cluster running 27 per cent faster (50 mins). The 340GB set showed it cutting runtime by around 50 per cent, with the DRAM cluster taking five hours and six minutes, and the Memory1 cluster needing two hours and 36 minutes.

With the largest, 516GB data set, the Memory1 cluster took four hours and 50 minutes. The DRAM cluster did not complete the test at all as the system ran out of resources.
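The percentages can be checked against the reported runtimes with some simple arithmetic. The sketch below uses only the rounded figures quoted here; the helper names are our own. On those rounded numbers the 340GB saving works out at about 49 per cent, and the DRAM-only cluster's advantage on the 164GB set at about 25 per cent, a little under the quoted 27 per cent, which may have been calculated from unrounded runtimes.

```python
def to_minutes(hours, minutes):
    """Convert an hours-and-minutes runtime to minutes."""
    return hours * 60 + minutes


def percent_reduction(baseline_mins, new_mins):
    """Percentage by which new_mins undercuts baseline_mins."""
    return 100.0 * (baseline_mins - new_mins) / baseline_mins


# 340GB set: DRAM-only versus DRAM plus Memory1.
dram_340 = to_minutes(5, 6)       # 306 minutes
memory1_340 = to_minutes(2, 36)   # 156 minutes
cut_340 = percent_reduction(dram_340, memory1_340)

# 164GB set: here the DRAM-only cluster is the quicker one.
memory1_164 = 67
dram_164 = 50
dram_advantage_164 = percent_reduction(memory1_164, dram_164)

print(f"340GB set: Memory1 cuts runtime by {cut_340:.1f} per cent")
print(f"164GB set: DRAM-only takes {dram_advantage_164:.1f} per cent less time")
```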

Diablo points out that the IO burden was much higher in the DRAM-only servers when running the test. By bulking out memory with NAND DIMMs this IO load was cut, saving runtime. But life isn't simple, as the extended runtime for the Memory1 servers on the 164GB set test shows.

Comment

A Diablo spokesperson tells us that the market opportunity for Memory1 has improved in the last six months because: "Emerging technologies for storage-class memory (SCM) have been delayed, providing Diablo an even better opportunity going forward."

This thought suggests that SCM, when it arrives, will cut into Memory1's addressable market. Diablo could possibly re-implement Memory1 using SCM instead of NAND. But, we're fairly certain, Intel will be looking at how servers could use 3D XPoint DIMMs, and Memory1 is there as an example of how to do it.

For now, within the application bounds illustrated by the Spark GraphX engine run, Memory1 looks like a viable way to cut runtimes in half or more when a memory-intensive app would otherwise need to do external drive IO.

The Inspur-Diablo Spark test runs are documented in this PDF. ®