This article is more than 1 year old

Yes, just what they need: Curious Dr MISFA injects a healthy dose of curiosity into robots

Algorithm aims to make 'droids more autonomous

Computer scientists in Germany hope to make humanoid robots smarter by programming the 'droids with a sense of “artificial curiosity.”

The bots gain their inquisitiveness from an algorithm dubbed Curious Dr MISFA, which is described in this paper [PDF] that appeared on arXiv this month. The software uses unsupervised and reinforcement learning techniques to try to make the learning process more autonomous.

The number of autonomous robots is projected to grow as the intersection between robotics and AI deepens. As machines begin to surpass humans at certain tasks, governments worldwide have begun to worry about potential job loss as robots threaten to take over.

But these concerns are premature. It requires a lot of time and effort to build a dexterous robot capable of performing a task autonomously.

Reinforcement learning (RL) is the leading technique used to teach agents the best way to navigate environments by enticing them with rewards. DeepMind and OpenAI are both heavy players in RL. Last year, both companies open-sourced DeepMind Lab and Universe – virtual gyms for developers to train their AI systems to play games. Agents have to learn through trial and error how to play a game well to get high scores.

Although RL is effective in simulated environments, it’s difficult to apply it in robots acting in the real world where the surroundings are more complex. Rewards are also more scarce and difficult to achieve by random exploration alone.

Professor Laurenz Wiskott and Varun Raj Kompella, researchers working at the Institute for Neural Computation at Ruhr University Bochum, want to solve that problem with their Curious Dr MISFA algorithm. It stands for Curiosity-Driven Modular Incremental Slow Feature Analysis, and the code for their algorithm has been made public online. “[It’s] based on two underlying principles called artificial curiosity and slowness,” the paper said. The first component motivates the robot to explore its environment and it’s rewarded when it “makes progress learning an abstraction.” The slowness rule updates the abstraction by “extracting slowly varying components” from raw sensory data.

Watching too much TV

Like most papers, it’s padded with jargon. But to make it easier, imagine a robot watching TV. At any time, it can extend its nimble robo fingers and press buttons on a remote control to flick through channels. What does it choose to watch? And what can it learn?

Enter Curious Dr MISFA. The algorithm means the robot can decide what channel to watch, for how long, and what it wants to learn without being explicitly told.

“The abstractions that it would learn will depend entirely on the data within each ‘channel’,” Kompella told The Register.

An abstraction can be thought of as a feature in a particular environment. Let’s say the robot was watching a documentary on zebras. An example abstraction would be encoding the position of the zebra in the field, Kompella explained.

Curious Dr MISFA works in three parts:

- A “curiosity-driven reinforcement learner” decides when the robot should stay on a channel or switch by learning the easiest abstractions first and the hardest ones last.

- Difficulty is judged by how long it would take to learn the abstractions from each channel. Information about an abstraction is uploaded via “adaptive abstraction.”

- The “gating system” decides when to stop updating and store the information, preparing the robot to learn a new abstraction. Once the abstraction is encoded, the robot gets a reward.

The idea of teaching robots to watch TV seems trivial, but if Curious Dr MISFA can be applied in more complex environments, it may prove useful. The idea is that the robot will know what to focus on and what actions to take, without being told.

“The algorithm could be useful to make, for example, a humanoid robot pick up abstractions such as extract positions of the moving objects, topple an object, grasp or pick and place an object,” Kompella said.

Real world is too complicated

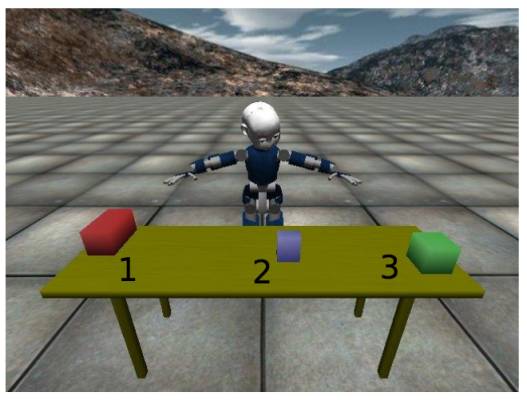

It’s important to note that the algorithm has yet to be tested on a robot in a real-world environment; it’s not sophisticated enough yet. The researchers have only tested their algorithm on vision simulation software for the humanoid robot, iCub.

Screenshot from the iCub simulation software (Photo credit: Kompella and Wiskott)

Three objects are placed on a table, and their positions continuously move. iCub sees this as a series of grayscale images that change over time. It explores by rotating its head to see the objects.

The algorithm is still pretty basic. Although the iCub robot learns the position of the first and third object, it can’t seem to learn the second object, as the positions it changes to are too erratic and do not follow a rigid rule.

Learning the abstractions – or positions of the objects – means they can be used by the iCub to interact with objects in a predictable way, the paper said. ®