Here are the graphics processors cloud giants will use to crunch your voices, videos and data

Well, in Nvidia's dreams, anyway

If you think you can build the next YouTube, Facebook or Google, you'll need to invest heavily in artificial intelligence and GPU-accelerated engineering to get any kind of edge over rivals.

And Nvidia just so happens to have some hardware for you, or so it says.

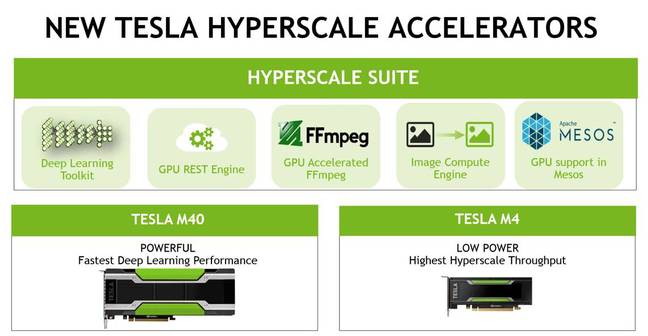

The graphics chip giant has revealed a Tesla M40 GPU accelerator, designed to power machine-learning systems that can make sense of the petabytes of nonsense humanity churns out every day. It's aimed at improving and training voice-decoding, face-recognizing, image-processing deep neural networks.

Nvidia is also touting its Tesla M4 GPU accelerator, a lightweight chip for processing video streams and serving as the front end to machine-learning systems.

Google is a huge user of machine learning: its translation tool relies on it, as does its search engine. The web giant is known to use GPUs to accelerate its processing of information, which lets people command their Android phones by voice, for instance.

A well-placed source told us last week the web goliath is using customized graphics processors tuned for particular applications. Google is not alone in wanting GPU muscle to power its systems – rival web giants, so-called hyperscale players, want in on the technology, so Nvidia's producing the chips for them.

The M40 has 3,072 CUDA cores, a peak single-precision performance of 7TFLOPS, 12GB of GDDR5 memory, 288GB/s of memory bandwidth, and consumes 250W of power. It's attached to hosts via PCIe. The M4 has 1,024 CUDA cores, 4GB of GDDR5 memory, 88GB/s of memory bandwidth, a PCIe low-profile form factor, and consumes 50 to 75W. The M4 is aimed at banks of servers handling requests from the internet – such as transcoding video, processing photos and video on the fly, and performing machine-learning inference.
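For the curious, the M40 numbers quoted above can be sanity-checked with a few lines of Python. This is a back-of-envelope sketch, not anything Nvidia published: it assumes each CUDA core retires one fused multiply-add (two floating-point operations) per clock cycle, and the function names are ours, made up for illustration.

```python
# Back-of-envelope check of the quoted Tesla M40 figures.
# Assumption (not from Nvidia's announcement): each CUDA core completes one
# fused multiply-add, i.e. 2 floating-point operations, per clock cycle.
FLOPS_PER_CORE_PER_CYCLE = 2


def implied_clock_ghz(peak_tflops: float, cuda_cores: int) -> float:
    """Clock speed in GHz needed to reach a given peak single-precision rate."""
    flops_per_second = peak_tflops * 1e12
    cycles_per_second = flops_per_second / (cuda_cores * FLOPS_PER_CORE_PER_CYCLE)
    return cycles_per_second / 1e9


def bytes_per_flop(bandwidth_gb_s: float, peak_tflops: float) -> float:
    """Memory bytes available per floating-point operation at peak rate."""
    return (bandwidth_gb_s * 1e9) / (peak_tflops * 1e12)


# Figures as quoted in the article: 3,072 cores, 7TFLOPS, 288GB/s.
m40_clock = implied_clock_ghz(peak_tflops=7.0, cuda_cores=3072)
m40_ratio = bytes_per_flop(bandwidth_gb_s=288.0, peak_tflops=7.0)

print(f"Implied M40 clock: {m40_clock:.3f} GHz")
print(f"M40 memory bytes per FLOP: {m40_ratio:.4f}")
```

Under that assumption, hitting 7TFLOPS with 3,072 cores implies a clock a little over 1.1GHz, and the card can fetch only a few hundredths of a byte from memory per floating-point operation at peak. No peak TFLOPS figure was quoted for the M4, so the same sum can't be run for the smaller card from the numbers here.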

"Major providers have been asking for this," Ian Buck, Nvidia's veep of accelerated computing and CUDA inventor, told The Register.

This isn't the kind of kit you order for your home PC: the keyword here is hyperscale. If you've got tens of thousands of machines, this is the stuff you'll order – well, if Nvidia gets its way, anyway. As well as this hardware, there's also a hyperscale suite of software that includes cuDNN, a GPU-accelerated FFmpeg, Nvidia's GPU REST engine that hooks up web apps to accelerators, and Nvidia's image compute engine, which can do image resizing faster than application processors. Mesosphere is also adding support for graphics chips to its Mesos data center operating system.

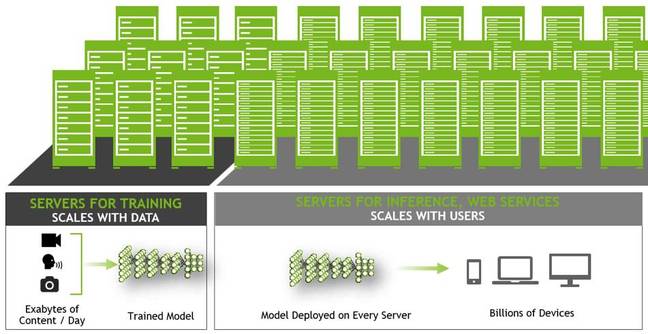

Game of halves ... Nvidia's idea of data center balance – powerful systems to train models in the backend, lighter accelerators in the front end

The Tesla M40 and hyperscale software suite are due out later this year. The M4 GPU will be available in the first quarter of 2016, we're told.

"The artificial intelligence race is on," said Nvidia CEO Jen-Hsun Huang.

"Machine learning is unquestionably one of the most important developments in computing today, on the scale of the PC, the internet and cloud computing. Industries ranging from consumer cloud services, automotive and health care are being revolutionized as we speak." ®