
HPC kids: Give us your power-sipping, your data-nomming, your core laden clusters

Cluster config compo students do battle

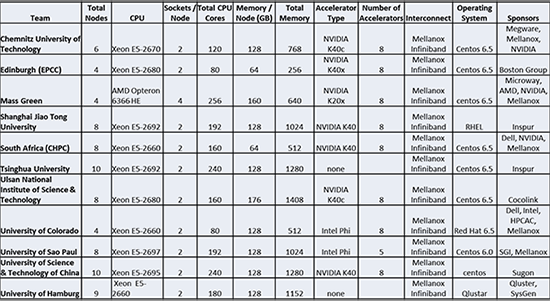

HPC blog Let’s look at what the teams were running at the ISC’14 Student Cluster Competition. This year, we had perhaps the widest variety of systems in terms of size, and also an entirely new configuration that we hadn’t seen in any competition to date.

The Mass Green team, with students from MIT, Bentley University, and Northeastern University, broke Team Boston tradition with their quad-socket, core-laden monster cluster. To maximize CPU core count, they went with 16-core AMD Opteron processors.

The result was a competition-topping 256 CPU cores, which is considerable. But, in typical Mass Green style, they didn't stop there. They also added eight low-profile NVIDIA K20X GPUs into the mix. However, they had only 640 GB of memory, which might hurt on some of the apps.

I think that they might be onto something here. Using quad-socket boards will keep more of the processing local to each node, reducing or eliminating the network hops needed to process some of the tasks.

However, this approach might pay off more at a competition like SC, where the teams run multiple applications at once. At the ISC competition, teams typically work on only one app at a time, putting less of a premium on application placement.

Small is beautiful... or is it?

On the "small is beautiful" side of the ledger, both Edinburgh and Colorado used four traditional dual-socket nodes. Colorado, due to the power cap and a twitchy PCIe slot, used only three of their nodes during the actual trials. This allowed them to run two Intel Xeon Phi coprocessors in each of their three nodes.

Edinburgh took the NVIDIA road, sporting eight K40x GPU cards. They also had another trick up their sleeve: liquid cooling. Working with their Boston Group sponsor, Team Edinburgh drove a four-node, liquid-cooled cluster with only 80 CPU cores and the lowest main memory in the bunch (256 GB).

They were obviously relying on the added punch they'd get from their GPUs. This should really help them nail LINPACK, but would this system perform well on the scientific applications? Also, would liquid cooling give them enough thermal headroom to push their undersized system to the point where they could outperform the larger systems?

How about some old school?

Team Tsinghua, a veteran team and former ISC champion, brought a ten-node cluster with 240 CPU cores and 1.25 TB of RAM to the competition. One thing missing from their configuration? Accelerators.

Tsinghua was one of the first teams to use GPUs effectively, but eschewed them in their ISC'14 rig despite riding a brace of NVIDIA K40s to a second-place finish at China's ASC'14 competition just a few months ago.

The team told me that they weren’t able to get their hands on GPUs in time for the trip to Germany, but that they hoped to make up for the deficit in number crunching power by having more CPUs in their box, and doing a better job of tuning their cluster for the HPC apps.

Newbie team Hamburg also went the old-school route. Their nine-node cluster is crammed into a ruggedized case on wheels. To me, the case looked like something a professional polka band might use to transport their accordions on a long multi-country tour. However, it did an admirable job of holding all of their gear, and it's optimized for a quick getaway as well.

Team Hamburg is the only team using Qlustar (pronounced "clue-star") as their cluster operating system. They tout it as a quick and easy, yet powerful, o/s that makes setting up and managing HPC clusters a walk in the biergarten. You can check Qlustar out here (https://www.qlustar.com/).

Coffee Table of Doom 2.0

The other German team, Chemnitz University of Technology, went back to the drawing board after their first Coffee Table of Doom cluster (four workstations crammed with a grand total of 16 accelerators) proved to suck up far too much power to be competitive. Coffee Table of Doom 2.0 includes six workstations, each with 128 GB of RAM, plus eight NVIDIA K40c accelerators scattered among them. Unlike its predecessor, this system should stay under the 3,000 watt power cap.

The sweet spot?

The rest of the 11-strong field are sporting similar systems, chock full of Xeons, accelerators, and plenty of memory.

Team Brazil (University of São Paulo) picked up SGI as a sponsor for their trip to ISC and also upped their configuration considerably from what we saw at ASC'14. In Leipzig they drove an eight-node, 192-core cluster packed with a terabyte of RAM and five Intel Xeon Phi coprocessors. Unlike their outing in Guangzhou, this should be enough hardware to allow the team to hang out with the big boys.

Team South Korea, representing South Korea’s Ulsan National Institute of Science and Technology, also put together a larger system than they used at ASC’14 last spring.

The team has also had a change of heart when it comes to accelerators. In their ASC'14 competition system, they used six nodes with five NVIDIA Titan GPUs. At ISC'14, they came with eight beefy nodes plus eight NVIDIA Tesla K40c GPUs. This should work out better for them, I suspect.

The third team from China, the University of Science and Technology of China, has the biggest Chinese system, with 10 nodes, 1.28 TB of memory, and eight NVIDIA K40c GPUs. The team is sponsored by Sugon, which seems to be the public face of Chinese computing powerhouse Dawning.

According to the team, they’ve received not only a lot of hardware from Sugon, but also plenty of technical help and expertise. It’ll be interesting to see how this team does in the overall competition, but also how it does against the other Chinese teams, who are sponsored by another very large Chinese computing company, Inspur.

Former champs clash

Team South Africa, the returning ISC'13 champ, and Team Shanghai, the recent ASC'14 winner, are both considered favorites to take the Overall Champion crown at ISC'14. South Africa has an eight-node, 160-core cluster with eight NVIDIA K40c accelerators. Shanghai also has eight nodes, but is using 12-core Xeons, giving them 192 total CPU cores. Shanghai is also running eight NVIDIA K40c accelerators.

Another key difference between the two is memory. Shanghai, with a terabyte of RAM, is sporting twice the 512 GB memory on the South Africa cluster. As one long-time cluster observer put it, “More memory is more better.”

In our upcoming articles we'll have video interviews with each of the teams. Watch and listen as each team discusses their hopes and dreams, their fears and what keeps them up at night, and how they’re going to stay under that damned 3,000 watt power cap. ®