Did Linux drive supers, and can it drive corporate data centers?

The place where Linus gets his paycheck says the Penguin was driving

Analysis Like many new technologies, the Linux operating system got its big break in high performance computing. There is a symbiotic relationship between Linux and HPC that seems natural and normal today, and the Linux Foundation, which is the steward of the Linux kernel and other important open source projects – and, importantly, the place where Linus Torvalds, the creator of Linux, gets his paycheck – thinks that Linux was more than a phenomenon on HPC iron. The organization goes so far as to say that Linux helped spawn the massive expansion in supercomputing capacity we have seen in the past two decades.

It is not a surprise at all that the Linux Foundation would have such a self-serving opinion, which it put forth in a report released this week. That report slices and dices data from the Top500 supercomputer list, which was established 20 years ago and ranks the largest supercomputers by their sustained performance on the Linpack Fortran benchmark test.

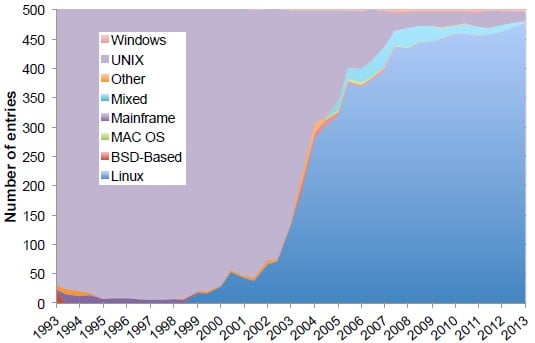

And as you can see, over the past two decades, Linux has come in and essentially replaced Unix as the operating system of choice for supercomputers. Here is the ranking of operating systems by machine count on the Top500 list since 1993:

Linux has come to dominate the top-end of the supercomputing racket in two decades

Basically, Unix and Linux have flip-flopped market shares over the two decades that the Top500 ranking has been around. And depending on how you want to look at it, it has been a meteoric rise for Linux and an utter catastrophe for Unix.

So how did Linux come to dominate the upper echelons of supercomputing?

"By offering a free, flexible, and open source operating system, Linux made it cost effective to design and deliver custom hardware and system architecture designs for the world's top-performing supercomputers," write Libby Clark and Brian Warner of the Linux Foundation. "As a result, the proportion of computers running Linux on the Top500 list saw a meteoric rise starting in the early 2000s to reach more than 95 percent of the machines on the list today."

It is certainly true that Cray, Silicon Graphics, IBM, Sun Microsystems, Digital, Convex, and others charged big bucks for their Unix or proprietary operating systems, but Linux took off not just because it was cheap and malleable.

Linux and the Message Passing Interface (MPI) method of distributing work and data across a cluster of machines both got their start in 1991, and had Torvalds decided to do something else, we might be talking about the rise of one of the open source BSD Unixes instead of Linux in this story.

But Linux did something that BSD did not: it caught the attention of hackers, academics, and IT vendors, and by the mid-1990s it had become a practical alternative to any of the Unixes for a modestly powered server, if you knew what you were doing.

This is also when Beowulf clustering, based on MPI and other messaging and collective systems software, became a practical way to build modestly powered parallel supercomputers.

By the end of the 1990s, Linux and MPI were not only cheaper bets, but were safer ones because all of the major systems vendors were promising to port at least one and sometimes more flavors of Linux to their various platforms. And not just x86 platforms, but any captive processors they might etch and weld into systems.

El Reg would contend that had Linux not existed, another open source Unix operating system would have risen to fill the void that Linux filled, because as the Linux Foundation has correctly pointed out in its rah-rah paper, no one can afford to pay list price for a software license or a support contract for an operating system on a machine that has thousands of nodes.

And if the national labs, academia, and enterprises with HPC systems had coalesced around an open source Unix, this could have filtered down to the rest of the systems business from the top. But as it was, Linux came at supercomputing from the bottom, and its champion Linus Torvalds caught the imagination of people at just the time that open source software was ready to go mainstream and challenge both Unix and Windows – and at just the time, during the dot-com boom, when the major server makers (including those with HPC systems) wanted to look cool.

This story is always the same. Unix came out of AT&T and academia, and by the mid-1980s had gradually become a safe option for workstations and then for a new kind of network-connected system called the server. And Unix machines raised hell and ate into the systems business – including HPC systems – from below.

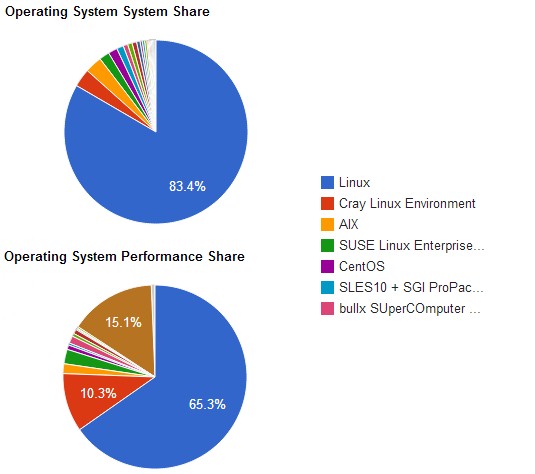

While it is clear that the Top500 supercomputers run Linux for the most part, which Linux is largely a mystery. Take a look at the distribution of operating systems from the June 2013 list, by both system count and by sustained Linpack performance:

Linux, as a group of different OSes, dominates the Top500 supers

Of the 500 machines on the list, 417 of them are running "Linux," which could be any Linux at all. SUSE Linux went through the top hundred machines on the list and reckons that about a third of them are running one or another variant of SUSE Linux Enterprise Server, including the modified versions peddled by Cray and Silicon Graphics.

The company tells El Reg that it is very hard to say how many of the remaining 400 machines on the list are running SLES or the development version, OpenSUSE. Only 83 of the machines mention their OS release by name.

This is not exactly good data, but even across those 83 machines, there is a wide variety of Linux types, which have been tweaked and tuned by vendors to match their iron – just as you would expect from national labs and academic centers.

It would be interesting to see a distribution of Linux distros among the 269 commercial customers on the Top500 list. All but one of them run Linux, but again, we can't see from the Top500 data which particular Linuxes.

Are enterprises any more likely to pay for Linux support than the national labs? Do they do what smartasses running small businesses do, which is to buy support licenses for one or two nodes and then leave all the other ones naked? Do they get killer discounts on Linux support contracts, like IBM cooked up with Red Hat last year to put Enterprise Linux on its supercomputers and clusters? If you paid list price for RHEL for a BlueGene/Q system, a rack would cost you $1.1m, or $1,350 per node, per year. But the special pricing IBM was offering dropped it down to a nickel under $44 per node.

And that is probably too expensive for some HPC shops.

The Top500 is interesting as a leading indicator, and has predicted the decline of Unix in commercial data centers – a slide that is going on as we speak and shows no sign of abating.

A decade and a half ago, Unix accounted for about half of server revenues and a relatively small slice of unit shipments. Now, only about 155,000 of the 9.67 million machines sold in 2012 were running Unix, and Unix accounts for about 16 per cent of the $52.5bn in revenues (that is Gartner data).
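For those who want to check the sums, here is a rough back-of-the-envelope sketch in Python that uses only the Gartner figures quoted above. The function name and the per-machine averages are our own illustration, not anything Gartner publishes, and "about 16 per cent" is treated as exactly 0.16, which is an assumption.

```python
# Figures quoted in the article (Gartner data for 2012).
UNIX_UNITS = 155_000          # Unix servers shipped
TOTAL_UNITS = 9_670_000       # all servers shipped
TOTAL_REVENUE_USD = 52.5e9    # total server revenue
UNIX_REVENUE_SHARE = 0.16     # "about 16 per cent", taken as exact (assumption)


def unix_market_summary():
    """Derive Unix's unit share and rough per-machine revenue averages.

    Hypothetical helper for illustration only; the averages are simple
    divisions of the quoted totals, not reported figures.
    """
    unix_revenue = UNIX_REVENUE_SHARE * TOTAL_REVENUE_USD
    return {
        "unit_share": UNIX_UNITS / TOTAL_UNITS,
        "unix_revenue_usd": unix_revenue,
        "revenue_per_unix_machine_usd": unix_revenue / UNIX_UNITS,
        "revenue_per_machine_overall_usd": TOTAL_REVENUE_USD / TOTAL_UNITS,
    }


if __name__ == "__main__":
    summary = unix_market_summary()
    print(f"Unix share of shipments: {summary['unit_share']:.1%}")
    print(f"Unix revenue: ${summary['unix_revenue_usd'] / 1e9:.1f}bn")
    print(f"Average per Unix machine: ${summary['revenue_per_unix_machine_usd']:,.0f}")
    print(f"Average per server overall: ${summary['revenue_per_machine_overall_usd']:,.0f}")
```

On those numbers, Unix is under 2 per cent of shipments but brings in roughly ten times the average revenue per box, which is why a platform that is shrinking in units still looms large in the revenue tables.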

Linux, if you believe the operating system distributions done by IDC, is about a fifth of worldwide revenues and is still growing its share. But it is not growing fast enough to knock Windows off its perch – not by a long shot. Windows has the dominant revenue share at the moment (right around 50 per cent), and accounts by far for the lion's share of server shipments (much more than 50 per cent, but IDC does not say in its publicly accessible data).

So, yes, Linux has taken over HPC and it is doing very well on new big-data jobs like Hadoop. And it is also the preferred platform for hyperscale data center giants such as Google and Facebook. But it has a long way to go before it dominates the enterprise data center like it does these markets.

If enterprises want to build applications more like Google and Facebook – and there is every reason to believe that the kinds of scalable, distributed apps that these companies build are the harbinger of the future, much as Beowulf clusters were for parallel supercomputing 15 years ago – we may see Linux, or rather all of the Linuxes as a collective, kick it into overdrive. ®