No more tiers for flatter networks

Solving the east-west traffic problem

There is a disconnect between data centre networks and modern distributed applications, and it is not a broken wire. It is a broken networking model.

The traditional three-tier hierarchical data centre network, as defined and championed by Cisco Systems since the commercialisation of the internet protocol inside the glass house, no longer matches the systems and applications running in those data centres.

Designed for the dotcom era, the hierarchical model is neither fast enough nor cheap enough for the cloud. That is why so many companies have been picking at Cisco's networking lunch and have, in turn, been gobbled up by server makers who know they must integrate networking into their systems to remain relevant.

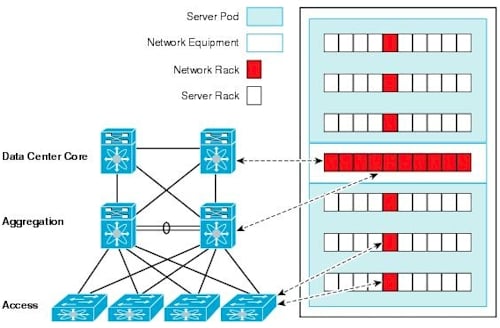

Here's the Cisco view of the networking world, somewhat simplified:

Cisco's hierarchical network model

The three-tier network design has redundancy built into the core and aggregation layers, which are cross-connected for multi-pathing as well as for high availability.

It works well when end users inside the firewall want to get at an application, because utilisation on the network is generally low and the links can therefore be oversubscribed.

This low network utilisation goes hand in hand with low server utilisation, which was the norm for two decades. It was more important to isolate workloads on physical servers and give them a permanent home with their slice of the corporate network.

The kinds of data zinging around today are also fatter and less predictable than simple web and email traffic.
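Oversubscription is usually expressed as the ratio of server-facing bandwidth to uplink bandwidth on a switch. The short Python sketch below assumes a hypothetical access switch with 48 Gigabit Ethernet server ports and two 10Gb uplinks (illustrative figures, not taken from any vendor's spec sheet) and shows why that design is comfortable at low server utilisation but not at high utilisation.

```python
def oversubscription_ratio(down_ports: int, down_gbps: float,
                           up_ports: int, up_gbps: float) -> float:
    """Server-facing bandwidth divided by uplink bandwidth on one switch.

    1.0 is non-blocking; above 1.0 the uplinks cannot carry everything
    the servers could send at once.
    """
    uplink = up_ports * up_gbps
    if uplink <= 0:
        raise ValueError("switch needs at least one uplink")
    return (down_ports * down_gbps) / uplink


def uplink_load(down_ports: int, down_gbps: float,
                up_ports: int, up_gbps: float, server_util: float) -> float:
    """Fraction of uplink capacity used if every server link runs at
    server_util and all of that traffic has to leave the rack."""
    return server_util * oversubscription_ratio(down_ports, down_gbps,
                                                up_ports, up_gbps)


# Hypothetical access switch: 48 x 1GbE to servers, 2 x 10GbE to aggregation.
print(f"{oversubscription_ratio(48, 1, 2, 10):.1f}:1")  # 2.4:1
for util in (0.2, 0.6):
    print(f"{util:.0%} server utilisation -> uplinks at "
          f"{uplink_load(48, 1, 2, 10, util):.0%}")
```

At 20 per cent average utilisation the uplinks run at about half capacity; at 60 per cent the servers offer more traffic than the uplinks can carry. The model ignores burstiness and assumes all server traffic leaves the rack, which is the worst case for a three-tier design.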

Saturation point

But what happens when you want to drive up utilisation on servers, usually through server virtualisation, while you keep adding more cores to the chip?

What happens is you saturate your network and the three-tier model starts breaking down – and you start looking at the supercomputing space for some inspiration.

"A lot of the applications coming out today – cloud, Web 2.0 or high-speed financial applications – have concepts from high-performance computing, which means doing things massively and in parallel," says Dan Tuchler, vice-president of product management for IBM's system networking division.

More than a decade after selling off its SNA networking business to Cisco and toeing the Cisco three-tier line, Big Blue spent a rumoured $400m to acquire Blade Network Technologies, which makes integrated switches for IBM, Hewlett-Packard, NEC and other blade server manufacturers, as well as top-of-rack switches for rack-based servers.

Tuchler doesn't think companies will start unplugging all of their core, distribution and access tier gear any time soon, because they have made large investments in it and the core switches let them plug in other features, such as firewalls and security appliances.

Pick a leaf

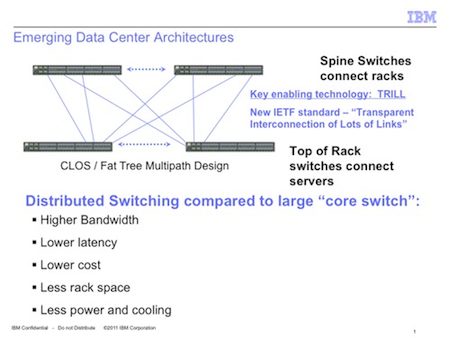

But for companies that need network traffic to move more efficiently at higher bandwidth and with lower latencies, then a leaf-spine network that has a flatter architecture, or perhaps a fat tree network inspired by supercomputers or a Clos network inspired by telecommunications, might be just the ticket.

Despite the extra devices used, they can be managed as one and still provide a lower latency than most core chassis devices.

IBM's conception of a leaf-spine network

The leaf-spine network architecture takes top-of-rack switches that connect directly to server nodes and links each of them back to a set of non-blocking spine switches with enough bandwidth to let clusters of tens of thousands of servers talk to each other.

Generally speaking, many leaf-spine networks avoid the oversubscription common in hierarchical networks, because the workloads they serve demand high bandwidth and low latency. The design also scales easily by nature: you can start very small and grow a long way, limited mainly by the number of ports on the spine switches.
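The scaling property can be sketched with a little arithmetic. In a two-tier leaf-spine fabric where each leaf runs one uplink to every spine, the number of spines equals the uplinks per leaf, and the number of leaves is capped by the ports on each spine. The Python below models that under those assumptions; the `LeafSpine` class and the port counts in the example are hypothetical, and real designs may bundle several links per leaf-spine pair or add a third tier to go bigger.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LeafSpine:
    """Hypothetical two-tier fabric: one link from every leaf to every spine."""
    spine_ports: int   # ports on each spine switch
    leaf_down: int     # server-facing ports per leaf
    leaf_up: int       # uplinks per leaf (one to each spine)
    down_gbps: float   # speed of each server-facing port
    up_gbps: float     # speed of each uplink

    @property
    def spines(self) -> int:
        # One uplink per spine, so uplinks per leaf = number of spines.
        return self.leaf_up

    @property
    def max_leaves(self) -> int:
        # Each spine spends one port per leaf, so spine ports cap the leaves.
        return self.spine_ports

    @property
    def max_servers(self) -> int:
        return self.max_leaves * self.leaf_down

    @property
    def oversubscription(self) -> float:
        # 1.0 means a leaf can send everything its servers offer to the spines.
        return (self.leaf_down * self.down_gbps) / (self.leaf_up * self.up_gbps)


fabric = LeafSpine(spine_ports=64, leaf_down=32, leaf_up=32,
                   down_gbps=10, up_gbps=10)
print(fabric.spines, fabric.max_leaves, fabric.max_servers,
      fabric.oversubscription)  # 32 64 2048 1.0
```

Growing the fabric means adding leaves, and the servers hanging off them, until the spine ports run out; matching leaf downlink and uplink bandwidth, as in this example, gives the non-blocking ratio of 1.0 described above.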

"We see customers with three and sometimes even four tiers in their networks, and it is not very efficient," says John Monson, vice-president of marketing at Mellanox Technologies.

Mellanox is a networking ASIC, interface card and switch maker with expertise in the InfiniBand switch fabric (which by definition was supposed to be a flat network). It got into the Ethernet switching racket through its $218m buy last November of sometime rival and partner Voltaire.

"Scaling up the same old approach just doesn't work," explains Monson.

"I can't wait forever for a virtual machine to go through four layers of switching to get from one point in the network to another. What matters for these modern workloads is efficiency and bandwidth, and if you have networks oversubscribed and hanging off cores, it doesn't work."
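Monson's point about layers of switching can be checked on toy topologies. The Python sketch below builds a deliberately tiny three-tier network and a tiny leaf-spine network (all switch and server names are made up) and uses breadth-first search to count how many switches sit on the shortest path between two servers in different racks. It leaves out the redundant core and aggregation links of a real three-tier design, which add resilience but do not shorten the path.

```python
from collections import deque


def undirected(edges):
    """Build an adjacency map from a list of (a, b) links."""
    graph = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def bfs_hops(graph, src, dst):
    """Number of links on a shortest path from src to dst (unweighted BFS)."""
    seen = {src}
    queue = deque([(src, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == dst:
            return dist
        for nxt in graph[node]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    raise ValueError(f"{dst} unreachable from {src}")


def switches_crossed(graph, a, b):
    # A server-to-server path with n links passes through n - 1 switches.
    return bfs_hops(graph, a, b) - 1


# Toy three-tier network: one core, two aggregation, four access switches.
three_tier = undirected([
    ("core", "agg1"), ("core", "agg2"),
    ("agg1", "acc1"), ("agg1", "acc2"),
    ("agg2", "acc3"), ("agg2", "acc4"),
    ("srvA", "acc1"), ("srvB", "acc4"),
])

# Toy leaf-spine network: every leaf connects to every spine.
leaf_spine = undirected(
    [(f"leaf{i}", f"spine{j}") for i in range(1, 5) for j in range(1, 3)]
    + [("srvA", "leaf1"), ("srvB", "leaf4")]
)

print(switches_crossed(three_tier, "srvA", "srvB"))  # 5
print(switches_crossed(leaf_spine, "srvA", "srvB"))  # 3
```

In the leaf-spine case every rack-to-rack path is leaf, spine, leaf, so it crosses exactly three switches whichever two racks you pick.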

Change of direction

The east-west traffic problem is what is really killing the three-tier network in the data centre.

Traditionally, traffic in data centres flowed up and down the network in a north-south orientation, through the access, distribution and core layers and back again.

But no more. According to recent vendor surveys, as much as 80 to 85 per cent of the traffic in virtualised server infrastructure – what we now call clouds – moves from server node to server node.

This is more like supercomputing than serving in the traditional sense and, not surprisingly, the networks are flattening out just like they have in high-performance clusters.
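One way to see the east-west versus north-south split for yourself is to classify flow records by whether both endpoints sit inside the data centre's own address space. The sketch below assumes the data centre uses the private range 10.0.0.0/8 and works on a handful of made-up (source, destination, bytes) records; real flow exports carry many more fields, and the prefix list and record format here are assumptions for illustration only.

```python
import ipaddress

# Hypothetical internal address space for the data centre.
DC_PREFIXES = [ipaddress.ip_network("10.0.0.0/8")]


def is_internal(addr: str) -> bool:
    ip = ipaddress.ip_address(addr)
    return any(ip in net for net in DC_PREFIXES)


def east_west_share(flows) -> float:
    """Fraction of bytes whose source and destination are both internal."""
    total = east_west = 0
    for src, dst, nbytes in flows:
        total += nbytes
        if is_internal(src) and is_internal(dst):
            east_west += nbytes
    return east_west / total if total else 0.0


# Made-up flow records: (source, destination, bytes).
flows = [
    ("10.1.0.5", "10.1.3.7", 700),     # VM to VM: east-west
    ("10.1.0.5", "10.2.0.9", 100),     # app server to storage: east-west
    ("203.0.113.4", "10.1.0.5", 200),  # external client: north-south
]
print(east_west_share(flows))  # 0.8
```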

Driving up server utilisation on such compute clusters – whether they run financial trading and risk analysis programs, virtual server clouds or parallel supercomputer applications – requires not just a flatter network, but a faster one.

If you stay with Gigabit Ethernet interconnects on a three-tier network, how can you drive up server utilisation if you move from one to two to four to eight cores per processor? You will add cores, but they will spend more and more of their time waiting for data to come back to them over the network.
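The arithmetic behind that question is simple division. Assuming a server with a single network link whose bandwidth is shared evenly among its cores (a simplification, since real traffic is never split evenly), the bandwidth available to each core halves every time the core count doubles:

```python
def per_core_mbps(link_gbps: float, cores: int) -> float:
    """Average link bandwidth per core in Mbit/s, assuming an even split."""
    return link_gbps * 1000 / cores


for cores in (1, 2, 4, 8):
    print(f"{cores} cores: {per_core_mbps(1, cores):.0f} Mbit/s per core on 1GbE, "
          f"{per_core_mbps(10, cores):.0f} Mbit/s on 10GbE")
```

Moving to a faster link restores the per-core share, which is why a flatter network alone is not enough without a faster one.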

By hook or by crook

Mellanox is not seeing data centres tossing out their expensive three-tier networks. But for new applications – setting up a new trading system or a private cloud – companies are rethinking the networks that lash the servers and storage together.

They are also, says Monson, grouping servers into pods of 500 or 1,000 machines, and then using bridges and gateways to hook these applications into the older networks.

"On a three-tier network, as you scale up the servers, those servers are spending all of their time waiting," says Monson.

"When you flatten the network, the CPU utilisation goes up, and throughput in the application goes up in big jumps, like a factor of two or three times."

That might mean you can support a given application workload with fewer servers on a new leaf-spine network than you would need on an old three-tier network.

And that is music to the ears of chief executives and financial directors. ®